Some posts and data worth taking a look at before you read this. These came out since the o3 release in late December and are the basis for what I’m about to talk about:

All we need to build super intelligence is bigger computers

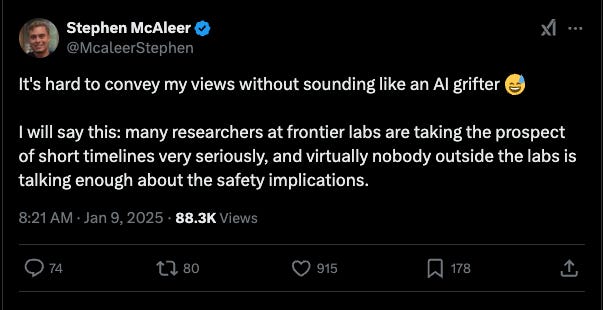

The first part of this post starts out kind of technical, but I’m going to try to explain it in both technical and simpler terms so that most folks can understand it. I think the above graph shows a massive breakthrough that no one is talking about, but everyone should be.

The breakthrough comes down to one thing: an algorithm that can scale inference-time compute at a reasonable time complexity, thus scaling reasoning. It sounds like a really complicated concept, but the essence of it is that we taught computers to “think”, like for real. OpenAI has been hinting at this for a while, ever since Strawberry, their new reasoner, leaked.

When computer scientists talk about algorithms, they often talk about them in terms of what’s called time complexity. In the most basic of terms, time complexity describes how the number of steps the computer takes to compute a solution grows as the input gets bigger. Things like sorting a list of your favorite foods are relatively cheap (what’s called linearithmic, O(n log n)) and things like listing every possible ordering of a list of items get really hard really fast (what’s called factorial, O(n!)).
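For the more technical folks, here’s a rough sketch of how differently those two kinds of problems grow. The function names are just ones I made up for illustration, and the step counts are back-of-the-envelope estimates, not measurements of any real program.

```python
import math

def steps_linearithmic(n: int) -> float:
    """Rough step count for a comparison sort: n * log2(n)."""
    return n * math.log2(n) if n > 1 else 0.0

def steps_factorial(n: int) -> int:
    """Number of distinct orderings of n items: n!."""
    return math.factorial(n)

# Compare how the two grow as the list gets longer.
for n in (5, 10, 20):
    print(f"n={n:>2}  sort ~{steps_linearithmic(n):,.0f} steps  "
          f"orderings = {steps_factorial(n):,}")
```

The sorting column barely moves while the orderings column blows past anything a computer could ever enumerate. That gap is what people mean by a “reasonable” versus an “unreasonable” time complexity.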

When an LLM like Strawberry “thinks”, it’s really searching, something roughly comparable to what we call the A* algorithm for searching complex graph data structures (worst case O(b^d), where b is the number of options at each step and d is how many steps deep the solution is). What is it searching? All of the potential solutions to a problem. You can really equate this to thinking about a really hard problem for a long time. You think about all of the possible solutions, and reason through what might be correct. Smart people (like smart LLMs) are better able to get to good solutions.
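To make the search analogy concrete, here’s a minimal A* sketch on a tiny maze. To be clear, this is textbook A* on a toy grid, not how any actual reasoning model works (nobody outside the labs knows those details), and the `a_star` function and the `maze` are made up for illustration.

```python
import heapq

def a_star(grid, start, goal):
    """Return the length of the shortest path from start to goal, or None.

    grid is a list of strings; '#' is a wall, anything else is open.
    """
    def h(cell):
        # Manhattan distance: never overestimates on a 4-connected grid.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    frontier = [(h(start), 0, start)]  # (estimated total, cost so far, cell)
    best_cost = {start: 0}
    while frontier:
        _, cost, cell = heapq.heappop(frontier)
        if cell == goal:
            return cost
        if cost > best_cost.get(cell, float("inf")):
            continue  # stale queue entry, a cheaper route was already found
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < len(grid) and 0 <= nc < len(grid[nr])
            if inside and grid[nr][nc] != "#":
                new_cost = cost + 1
                if new_cost < best_cost.get((nr, nc), float("inf")):
                    best_cost[(nr, nc)] = new_cost
                    heapq.heappush(
                        frontier, (new_cost + h((nr, nc)), new_cost, (nr, nc))
                    )
    return None

maze = [
    "..#.",
    "..#.",
    "....",
]
print(a_star(maze, (0, 0), (0, 3)))
```

The heuristic `h` is the “smart” part: a better guess about which direction looks promising means fewer dead ends explored before finding the answer.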

So, what’s the big deal? What this graph shows is that OpenAI (and probably others) were able to come up with an algorithm that can not only reason but is also efficient and bounded by some reasonable time complexity, meaning we can just scale compute and it gets smarter, maybe a lot smarter.
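Here’s a toy way to see why more compute could mean better answers. This is not OpenAI’s algorithm. It’s a deliberately simple model I’m assuming for illustration: the machine makes independent attempts, each with the same small chance of being right, and something can reliably check which attempt is correct. The `chance_of_a_hit` function and the 1% figure are made up.

```python
def chance_of_a_hit(p_single: float, attempts: int) -> float:
    """Probability that at least one of `attempts` independent tries succeeds."""
    return 1.0 - (1.0 - p_single) ** attempts

# Assume each attempt on a hard problem is right only 1% of the time.
for attempts in (1, 10, 100, 1000):
    chance = chance_of_a_hit(0.01, attempts)
    print(f"{attempts:>4} attempts -> {chance:.1%} chance of a correct answer")
```

Under those assumptions, spending more compute on more attempts steadily pushes the odds of a correct answer toward certainty, which is the basic intuition behind scaling inference-time compute.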

All we need to build super intelligence is bigger computers

So what?

I equate this whole situation to knowing about Covid circa February ’20. You would go around telling all of your friends that the world was about to end and sound like a crazy person. A select few well-known people knew, and later marked their tweets as “I told you so”.

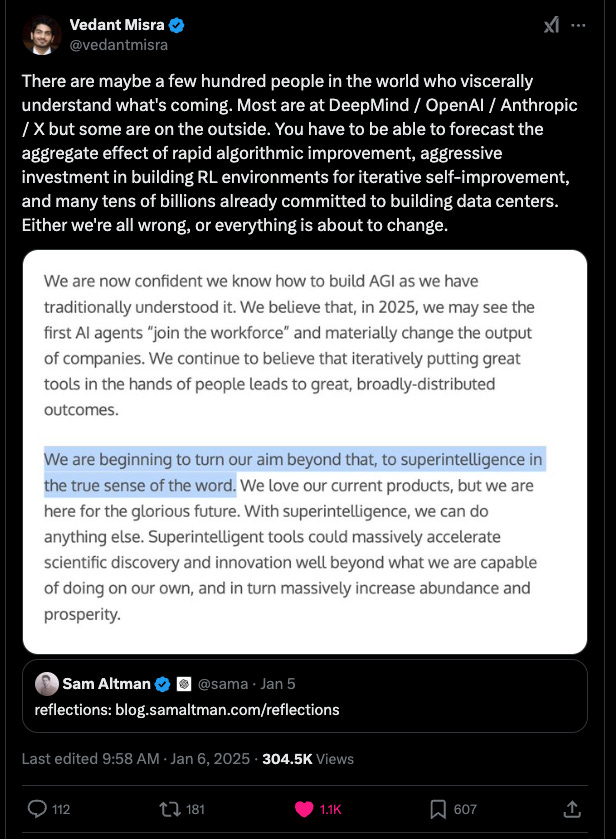

Basically, this is a big deal, a really, really big deal, probably a couple of orders of magnitude bigger than Covid. If we’re lucky, on the order of low tens of thousands of people really understand it today. Maybe low thousands (or even hundreds) have thought about it deeply and are close enough to the technology to understand the impact.

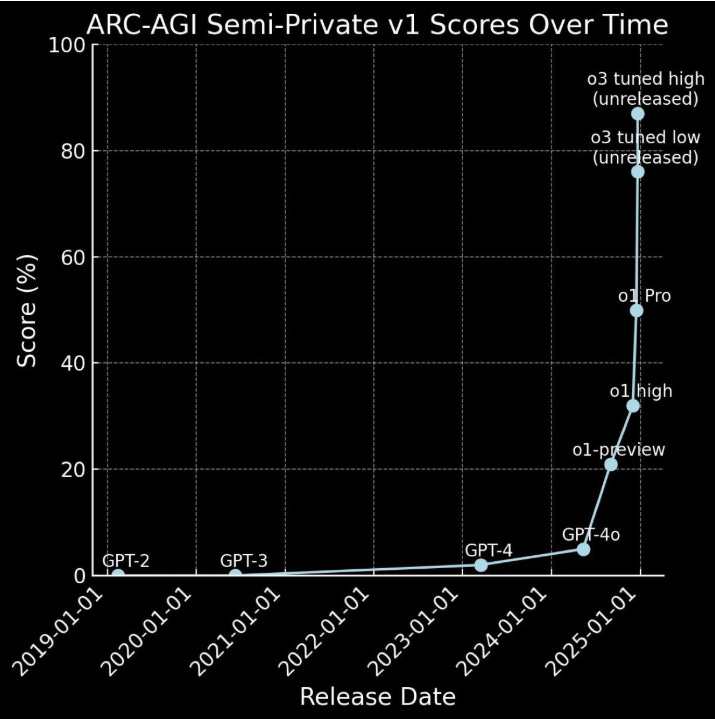

How does life change? Essentially, all of the “reasoning” work that humans currently do effectively goes away, and all we’re left with is feeding context to the machines. I just asked ChatGPT to come up with a list of industries by GDP and then estimate how much of each is reasoning work. You can think of that work as mostly being automated away, or at least a broad swath of it:

When you add this up, even if ChatGPT is mostly wrong and only directionally right, roughly half of the current human workforce is about to be automated, potentially imminently. That’s an insane proposition. Again, people who come out and say this are going to sound like crazy people.

To keep riffing on just the AGI scenario, what we get is a world where there’s an extreme supply shock on knowledge work (with some time to implement, of course). Humans will mostly be feeding context to machines that then solve the problems. Downstream of that, our systems for producing goods, food, etc. are already very efficient, meaning that everything will become very, very cheap. Healthcare, professional services, software, etc. will be cheap. Goods will get even cheaper too, because the workforce that was doing knowledge work can now work on physical goods problems. We’ll probably need some form of UBI. It’s unclear.

Ok, cool, now, that isn’t even the part that’s the big deal

The big deal is that that curve likely doesn’t stop. We can just scale compute and the machines get smarter. Smarter than the smartest humans. Probably, by a lot.

The Event Horizon

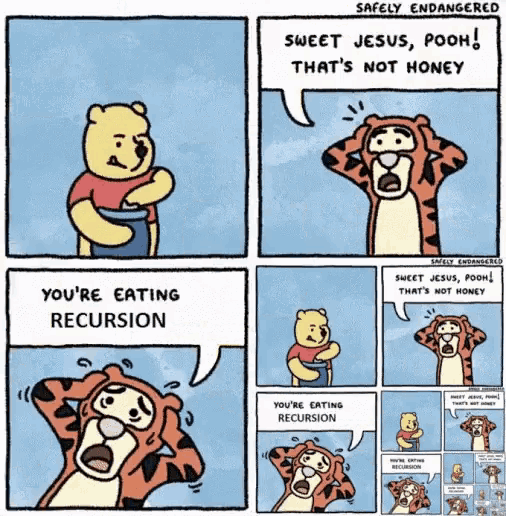

With o3, we may be close to a point where the AI is better at AI research than the humans are. This means that the AI can “recursively self-improve”. Below is a good meme on recursion for your entertainment:

Not only will we be scaling our compute and therefore reasoning ability, but the algorithms will also be making themselves better. It’s a scenario called fast takeoff, meaning we could very quickly scale to superintelligence.

This moment, when the computers start improving themselves, is called the event horizon: a point where we can’t go back, and don’t exactly know what’s on the other side. A lot of smart people have thought a lot about what this means, and truthfully, we can only guess what will happen. Machines smarter than humans have never existed. Machines that can make themselves smarter have also never existed. It’s completely new territory for us. The consensus view is that this will be the most impactful technology humanity will ever create. It’s similar to inventing God.

Timelines

The AI researchers make this whole thing sound imminent. In some ways, superhuman reasoners probably are very close to being a thing (this year).

Deployment is a different story. It’ll take time for these models to be deployed, but the question is not “if”, it’s “when”. Probably sooner than we expect. The reasoners will also help us deploy them quite quickly.

The other problem is cost - these models are very expensive.

The good news is that compute scaling is super-exponential. I could try to pull a chart of costs, but a GPT-4-quality model is something like 100 or 1,000 times cheaper than it used to be. What is very expensive now will be a lot cheaper in the near future. Plus, if the machine improves the algorithm, it’s unclear how much more we can gain there.

Sci Fi

The rest of this post bridges into the world of sci-fi, mostly because it’s the only comparable mental framework we have for talking about this stuff. I’m also just a nerd lol. This might sound a little odd and, if it were just me saying it, a little off base. What scares me is that for it to be false, these frontier researchers would need to be equally off base.

The first scene I compare this to is the final scene from Interstellar, where Coop, the main character, falls into Gargantua, the black hole.

Black holes have a similar event horizon concept: there’s a point so close to the mass where the gravity is so strong that even light can’t escape. No one on earth, not even the smartest physicists today, really knows what happens inside. It’s similar to AI, where no computer scientist can predict what’s going to happen after recursive self-improvement. In the movie, Coop sees what’s there:

What he discovers inside is that it’s only love, the love for his daughter, that can transcend space and time. He uses it to save humanity.

The other data point from sci-fi that I think is interesting (and also significantly funnier) is this scene from The Hitchhiker’s Guide to the Galaxy:

The premise is that a hyper-advanced civilization commissions a computer to figure out the answer to the ultimate question: the meaning of life, the universe, and everything. The computer thinks for 7.5 million years, comes back, and claims the answer is “42”. When its makers complain, “that can’t possibly be the answer!”, the computer says “it would have been simpler of course to know what the actual question was … that’s not a question, only when you know the question will you know what the answer is”.

As we’re inventing these technologies, we need to think about questions of safety, but I think the actual questions are much deeper. It’s going to allow us to explore the universe in ways we never thought possible, and maybe, just maybe, come up with some of the answers that a lot of us have been looking for.

Conclusion

Cute comment from my girlfriend when I tried to describe this whole thing:

Her: “So it’s gonna be like WALL-E world?”

Me: “Yeah, sorta”

Her: “I’m Eve”

Whether or not the AI researchers are right about this, my main takeaway is that the key is to lean into our humanity. We’ll see the best and worst of humanity as this emerges, but our humanity is all we have at the end of the day. Either way, my plan is to hang out on X, wear underwear on my head, build cool stuff, and have fun with my friends. That’s what I love to do anyway. I think stressing about normal stuff (jobs, human drama, etc.) is kind of irrelevant right now. In the coming days I’m gonna do another post on what’s next and what matters if this holds true. The general shape is that it comes down to actualization, community, faith, and making meaning in a new world, a world where a God exists (at least a new kind of God).

Onwards.
