Every Website is Becoming an API

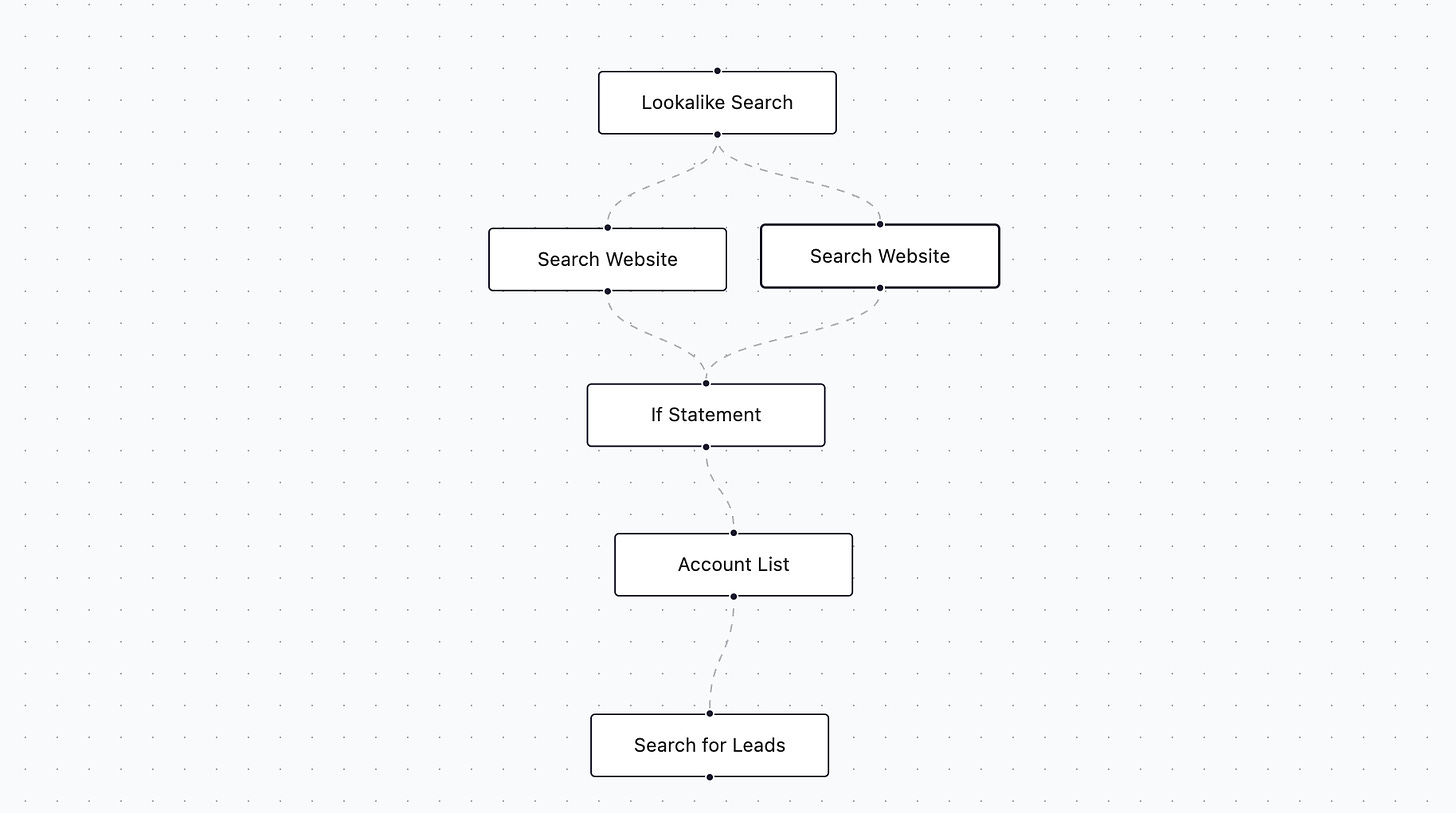

We’re working on the next version of Flyflow, Flyflow V2. The first version of the workflow builder is something we built and launched quite quickly to test the market, validate the idea, and get an initial set of users.

We quickly learned a few things:

The workflow builder is a power user tool. Probably only the top 25% of really technical users actually grok it.

Workflows are hard to iterate on because you need to run them before you see any results, which makes the prompts inside them hard to iterate on too.

The lead lists that we’re producing are alright but not up to our standards of quality (probably only 30-40% of leads are the quality we want). That probably comes down to the method we’re using: we stack filters on filters, and the more you filter, the worse (or smaller) the list gets.

With these learnings, we clearly needed to go back to the drawing board on a second version of the UI. What we’re sprinting towards now looks less like a workflow and more like a search engine over both structured and unstructured data, with mixed semantic search over images and text. A search engine for companies.

This means doing a pretty meaningful rewrite of the app to support a radically different kind of query pattern, along with a lot of data infrastructure to get the data in the right format.

Search engines have three key components:

Crawling and data extraction

Search index

Query interface

For the past few days I’ve been focused on the crawling and indexing backend components, to make sure the technical choices we lock into here are the right ones for the long term.

Indexing these days is actually pretty advanced. The plan is to use Elasticsearch for this combination of structured, unstructured, and semantic querying. The size of the data will be ~100M records once we scale up the company dataset (there are only so many companies in the world), which is totally reasonable for this system to handle. Elastic provides a lot of knobs to turn when it comes to doing very advanced queries over this data. There are a few other options in this space like Algolia, but they’re less configurable. We’re looking for the power user tool.
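To make the "structured, unstructured, and semantic" combination concrete, here is a rough sketch of what one search request body could look like. It only builds the JSON body and doesn't talk to a cluster. The field names (`country`, `employee_count`, `description`, `description_embedding`) are made up for illustration and are not our actual schema, and it assumes an Elasticsearch 8.x style search request with a top-level `knn` section next to `query`.

```python
from typing import Any, Dict, List, Optional


def build_company_query(
    text: str,
    query_vector: List[float],
    country: Optional[str] = None,
    min_employees: Optional[int] = None,
    size: int = 10,
) -> Dict[str, Any]:
    """Build a hybrid search body: structured filters + full text + kNN.

    All field names are hypothetical placeholders.
    """
    # Structured part: exact-match and range filters (not scored).
    filters: List[Dict[str, Any]] = []
    if country is not None:
        filters.append({"term": {"country": country}})
    if min_employees is not None:
        filters.append({"range": {"employee_count": {"gte": min_employees}}})

    return {
        "size": size,
        # Unstructured part: scored full-text match on the description.
        "query": {
            "bool": {
                "must": [{"match": {"description": text}}],
                "filter": filters,
            }
        },
        # Semantic part: approximate nearest neighbours on an embedding field,
        # restricted by the same structured filters.
        "knn": {
            "field": "description_embedding",
            "query_vector": query_vector,
            "k": size,
            "num_candidates": max(100, size * 10),
            "filter": {"bool": {"filter": filters}},
        },
    }
```

The resulting dict would be sent as the body of a search request against the company index. How the text and vector scores get combined is one of those knobs that would need tuning.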

With this part of the problem solved, most of the rest comes down to the data infra and crawling required to actually gather the data we need. One of the unique parts of this problem is that we’re searching for specific insights and extracting them from the web. It’s not enough to pull the content of a website. We want to go directly to the source that answers questions like: which logos and case studies are on the website? Does the company’s app have onboarding? Do they display a banking license logo on the website?

To do this we need to develop very advanced web crawlers that are both proficient and reliable at their tasks.
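As a rough idea of what the simplest version of one of these extractors could look like, here is a sketch for the "which logos are on the website" question. It's split in two: a Playwright step that returns the rendered HTML, and a pure parsing step that is easy to test on its own. The "anything with `logo` in its alt, src, or class" heuristic is just an assumption for illustration, and real sites will need something much smarter. The function names are made up.

```python
from html.parser import HTMLParser
from typing import List


class LogoCollector(HTMLParser):
    """Collects <img> tags that look like logos (naive heuristic)."""

    def __init__(self) -> None:
        super().__init__()
        self.logos: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attr = {name: (value or "") for name, value in attrs}
        haystack = " ".join(
            [attr.get("alt", ""), attr.get("src", ""), attr.get("class", "")]
        ).lower()
        if "logo" in haystack:
            # Prefer the alt text as the label, fall back to the image URL.
            self.logos.append(attr.get("alt") or attr.get("src", ""))


def extract_logos(html: str) -> List[str]:
    """Return labels for images in the HTML that look like logos."""
    collector = LogoCollector()
    collector.feed(html)
    return collector.logos


def fetch_rendered_html(url: str, timeout_ms: int = 30_000) -> str:
    """Load a page in headless Chromium and return the rendered HTML."""
    # Imported lazily so the parsing code works without Playwright installed.
    from playwright.sync_api import sync_playwright

    with sync_playwright() as p:
        browser = p.chromium.launch(headless=True)
        try:
            page = browser.new_page()
            page.goto(url, wait_until="networkidle", timeout=timeout_ms)
            return page.content()
        finally:
            browser.close()
```

Usage would be something like `extract_logos(fetch_rendered_html("https://example.com"))`. Keeping the browser step separate from the extraction step means each extractor can be tested against saved HTML without launching a browser.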

This took me down a rabbit hole of both doing my own experiments with Playwright and exploring tools like Browserbase for agent-based automations. I did a spike on most of these, and quickly came to the conclusion that this is still an unsolved problem, with a lot of half-baked solutions out there. Browserbase has definitely not won this market yet.

The big idea here, though, and the much more thought-provoking one, is that these AI automations over browsers are essentially going to turn every website into a programmable API. Exposing every website as what is essentially an API unlocks a huge amount of value for the world and significantly widens the space of problems that can be solved. The current solutions probably don’t get it fully right, but I believe that this is a problem that can be solved. When it is, it’s going to be hugely valuable for allowing new applications to be built on previously walled gardens of internet infrastructure.

Where does this leave me? Tbh I want a bit more control, which I think leads me to writing my own automation system on top of Playwright. I’m excited to experiment more in this space though, and to see how it evolves in the coming years.