The Event Horizon Part 2: The Great "AGI" Bubble

A lesson in intrinsic value vs economic value, and a story of how I could have been wrong in my initial post

In January I wrote about the AGI event horizon. This is the second post in the series, and it covers in detail the likely economic effects I see from (nearly) free, very smart text and image models of a certain quality, which unlock almost ALL of the economic value of AI.

This is what it looks like on the other side of an “AGI” event horizon. The models themselves are capable of recursive self-improvement (essentially), but they don’t have the data they need to get better (context). They quickly become almost perfect at the stuff that they can see, but are almost useless at the stuff they can’t. It’s also where I was wrong in my initial post. If something can do all of the reasoning, you still need to give it the data to reason over.

It’s all a lesson in intrinsic vs economic value.

Some data to look at before you read this post:

There has not been a model since GPT-4 that has increased the economic value I have gotten from frontier AI models.

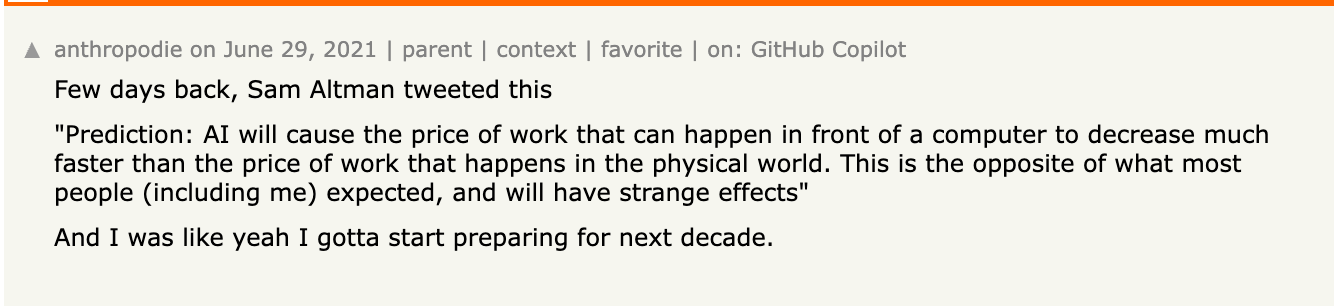

I think it has become increasingly likely that we have all gotten so caught up in how valuable something like AI might be that we forgot to measure how valuable it actually is - just because something is intrinsically valuable (AI, LLMs) does NOT mean it will be economically valuable.

Several questions and then my annotated answers:

How have you / people in your life been using LLMs?

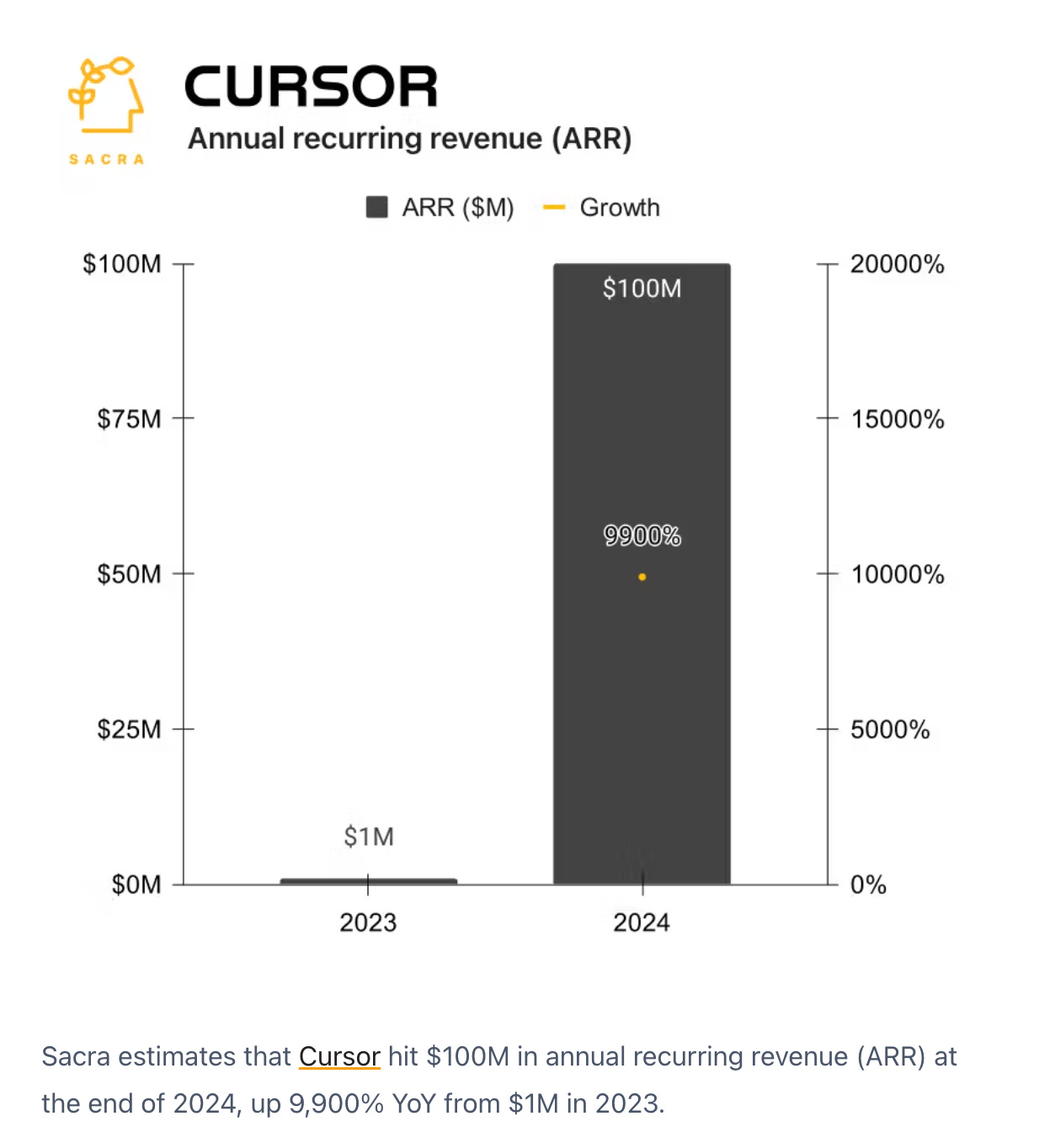

My answer: I use them for code, but they really haven’t gotten much better for coding since GPT-4, and as the models specialize, the coding tools are now nearly free.

My friends use them for some research and some coding. Just because the model can do magic coding stuff doesn’t mean it’s close to building full applications.

What is the most useful LLM tool you’ve used, and what does it cost?

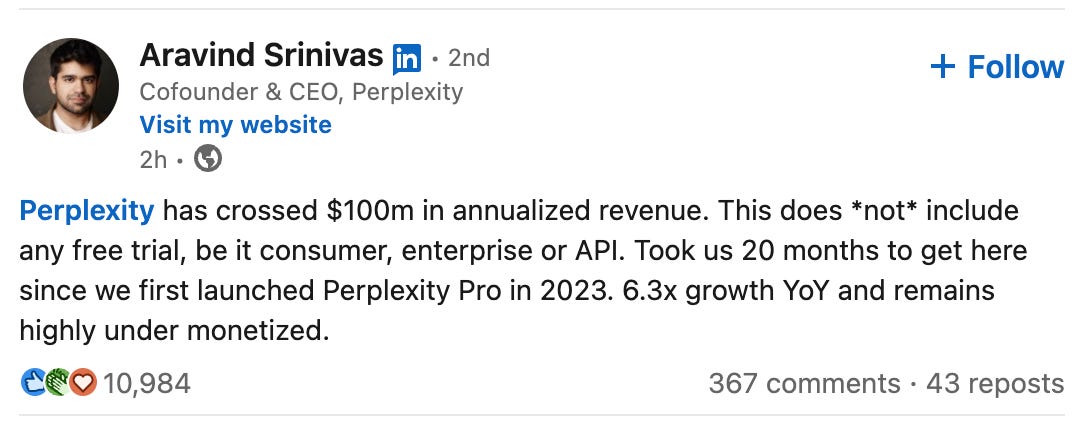

My answer: Probably Cursor or the GPT interface - I can get these for free or nearly free now with open source editors and models like DeepSeek. The agents are good but haven’t increased my coding speed much.

Some people like things like deep research, but that has also become nearly free (Grok and others include it with just a Twitter subscription).

When you go and talk to a random person in a restaurant, do they use AI models or see value in them?

My answer: Rarely for the average American

Wait, but NVDA is so valuable! AI must be the next thing!

Yes and no. It’s likely the most valuable thing humanity has ever created, but just because it’s intrinsically valuable doesn’t mean it will be economically valuable to the people who created it, just as the internet wasn’t economically valuable to its creators.

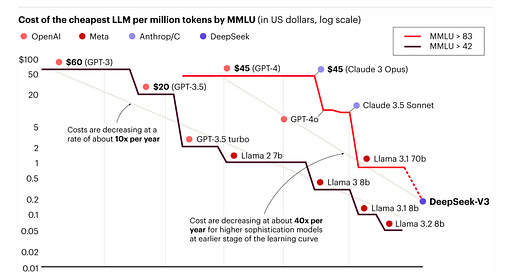

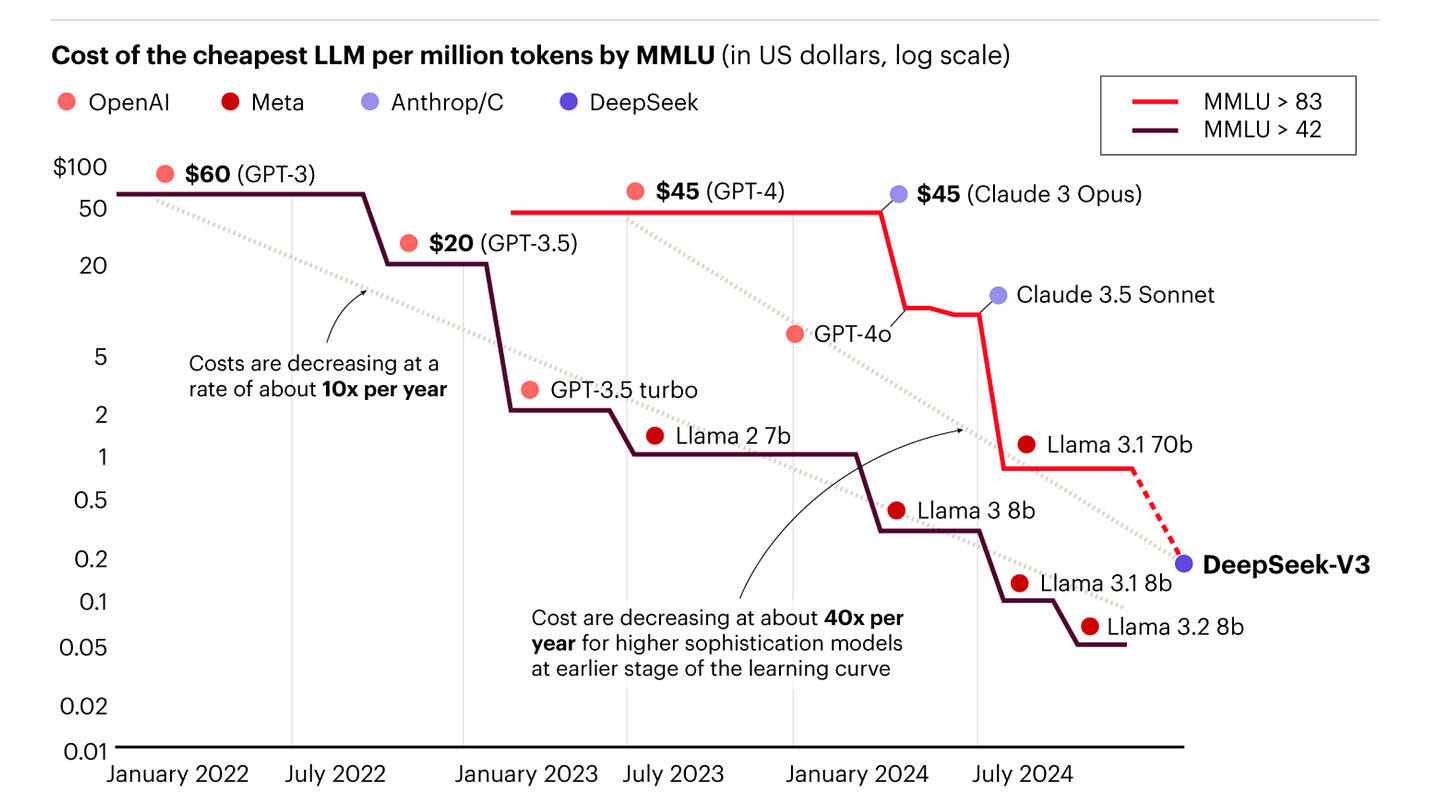

The model makers, the sellers of AI snake oil, have known all along about the cost compression curve, but they’ve kept selling anyway, to raise more money to get us to this vision of “AGI” which will for sure, undoubtedly, be the most valuable thing (economically) ever. As it turns out, AGI as they’ve been selling it will be (nearly) free, and we’ll all have it running on our laptops in the next 2 years. It’s the most impactful thing that humanity has created so far in its short history, and the sell that all of these folks have been doing is a total economic scam.

The models that come after GPT-4 quality are still very valuable, but only in a very narrow scope. Their value won’t be sellable in any way. The majority of the economic value is unlocked once a model can write long essays and code, and once you get there, you have kind of maxed out the economic value of text. It is also clear that the long-essay and code models will be almost free to any human on earth.

Our Current Levered Position

When looking at our current position, it’s also helpful to look at history. A good time period to look at is the Roaring 20s:

Electrification resulted in HUGE gains in productivity from manufacturing

Many people took on a lot of margin (debt) in the stock market to bet on that future

In 1929, the leverage unwound when people weren’t buying more of what was being manufactured, and it crashed into the Great Depression

Today it looks very similar:

AI is resulting in HUGE gains in productivity from knowledge work

Many people are levered on the future of knowledge work (AI stocks, student debt, etc.)

In 2025/2026 we’re going to have a massive oversupply of knowledge-work systems that outstrips demand

Conclusion

If you look at the stock market, this is playing out quite clearly. So much of the market trades around $NVDA, but people are only buying on a couple of assumptions:

Tech companies will continue to buy GPUs en masse

The people buying from the tech companies will see the AI models as extremely valuable

What they don’t see is that DeepSeek et al. have released inference optimizations that have effectively cut the cost of the most economically valuable parts of AI by 20x, which means that the tech companies DON’T NEED more GPUs, which means that the forward revenue of NVDA could crater very quickly, rendering it almost worthless and starting to unravel this bubble. DeepSeek supports 200m monthly actives on 2000 GPUs total.
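To make that arithmetic concrete, here is a rough back-of-envelope sketch in Python. It only uses the figures quoted above (200m monthly actives, 2000 GPUs, a 20x cost reduction), which are this post's numbers rather than verified measurements. The function names are made up for illustration, and the second function rests on an extra simplifying assumption that GPU count scales linearly with inference cost.

```python
# Back-of-envelope check using the figures quoted in the post.
# These are the post's numbers, not independently verified measurements.
MONTHLY_ACTIVE_USERS = 200_000_000  # "200m monthly actives"
TOTAL_GPUS = 2_000                  # "2000 GPUs total"
COST_REDUCTION = 20                 # "20x" cheaper inference


def users_per_gpu(users: int, gpus: int) -> float:
    """Monthly active users served per GPU."""
    return users / gpus


def gpus_without_optimizations(gpus: int, cost_reduction: int) -> int:
    """GPUs the same workload would need without the optimizations.

    Illustrative assumption (not from the post): GPU count scales
    linearly with inference cost, so 20x cheaper means 20x fewer GPUs.
    """
    return gpus * cost_reduction


if __name__ == "__main__":
    print(users_per_gpu(MONTHLY_ACTIVE_USERS, TOTAL_GPUS))
    print(gpus_without_optimizations(TOTAL_GPUS, COST_REDUCTION))
```

Under those numbers, each GPU serves about 100,000 monthly actives, and the same workload would have needed roughly 40,000 GPUs without the 20x improvement. That gap is the demand that GPU buyers may no longer need to fill.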

The other thing that most don’t see is that while this is happening, the value we’ve placed in higher education will simultaneously erode, because what we’ve trained all of these “well educated” individuals in is the ability to write and code. Lastly, during COVID we continued to lever up by printing money to stabilize the economy (more debt).

This likely won’t happen tomorrow (although it could). It’s likely a process that will unravel over years (hopefully gently and not in an aggressive way).

If you look at the 20s, the outcome was incredible for humanity. Incredible productivity and quality of life for most humans. Life got better. This bubble will likely be exactly the same; we just have to wait a little while to see how amazing it is, and endure some short-term pain.

Similar to the dot-com bubble, when people thought it was “so over,” the best companies were being built. The AI bubble will be very much the same.

AGI is real, it’s just not what we expected. In some ways it’s a lot better than we could have ever imagined, but in others it will be much worse. I’m forever an optimist, but I’m not long the people selling snake oil. I’m long the engineers and entrepreneurs who will realize what it actually is and use it to make an incredible future for all of us.